Ah, controversy! Physics is of course not immune to it, and sometimes the participants in an argument can let anger get the better of them.

An example of this began last week, when the following video clip appeared, featuring Professor Brian Cox explaining to a lay audience the Pauli exclusion principle:

For reasons that I will try to elaborate on in this post, this short video was, to say the least, eyebrow-raising to me. Tom over at Swans on Tea picked up on the same video, and wrote a critique of it with the not-quite-polite title, “Brian Cox is Full of **it”, in which he explained his initial critique of the video based on his own knowledge. I chimed in with a comment,

Well put. I just saw this clip the other day and it was an eyebrow-raiser, to say the least. I thought I’d mull over the broader implications a bit before writing my own post on the subject, but you’ve addressed it well.

A more technical way to put it, if I were to try, is that the Pauli principle applies to the *entire* quantum state of the wavefunction, not just the energy, as Cox seems to imply. This is why we can, to first approximation, have two electrons in the same energy level in an atom: they can have different “up/down” spin states. Since the position of the particle is part of the wavefunction as well, electrons whose spatial wavefunctions are widely separated are also different.

Well, apparently being criticized was a bit upsetting for Professor Cox, because he fired off the following angry comment to both myself and Tom:

“Since the position of the particle is part of the wavefunction as well, electrons whose spatial wavefunctions are widely separated are also different.” What on earth does this mean? What does a wave packet look like for a particle of definite momentum? Come on, this is first year undergraduate stuff.

…

I’m glad that you, Tom, don’t need to know about the fundamentals of quantum theory in order to maintain atomic clocks, otherwise we’d have problems with our global timekeeping!

So, he basically insults both Tom and me over the course of several paragraphs, without really addressing the comments at all. It gets worse. In addition to later calling me “sensitive” (this from the obviously sensitive Dr. Cox; cough cough projection cough), he doubles down on his anger by referring on Twitter to everyone criticizing him (including Professor Sean Carroll of Cosmic Variance) as “armchair physicists”.

Well, there have been a number of responses to Cox’s angry rant, including a response on the physics from Sean Carroll and a further elaboration by Tom on his own case at Swans on Tea. I felt that I should respond myself, at the very least because I’ve been accused of not understanding “undergraduate physics” myself, but also because the “everything is connected” lecture in my opinion represents a really dangerous path for a physicist to go down.

We’ll take a look at this from two points of view; first, I’d like to comment on the style of Cox’s response to criticism, and then on the more important substance of the discussion.

First, on the style. When your response to criticism from research physicists is that they don’t understand undergraduate physics and that they are “armchair physicists”, you’ve basically admitted that you’ve lost the argument*. Though scientists certainly get into petty spats far too often, typically sparked by research disagreements, it is not considered a good thing. It is especially bad form for someone who is representing the field in a very public way to whine and name call: it is a very poor showing of what science is supposed to be all about.

Okay, let’s get to the substance! In order to get into the meat of the issue, I should say a few words about the quantum theory, since I don’t discuss it very often on this blog. Dr. Francis talks a bit about one of the issues — entanglement — over at Galileo’s Pendulum, also in reaction to this “controversy”.

Up through the late 19th century, “classical” physics served very well in describing the universe. As researchers started to investigate the behavior of matter on a smaller scale, they began to encounter phenomena that couldn’t be explained by the existing laws, such as the structure of the atom (more on this in my old post here).

Many of these issues were spectacularly resolved by the hypothesis that subatomic particles such as the electron and proton are not in fact point-like objects but possess wave-like properties. This idea was introduced by the French physicist Louis de Broglie in his 1924 doctoral thesis, and it naturally explained such phenomena as the discrete energy levels that electrons in atoms possess. The wave properties of matter can be demonstrated dramatically by using electrons in a Young’s double slit experiment; the electrons exiting the pair of slits produce a wave-like interference pattern of bright and dark bands, just like light.
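For a sense of scale, here is a minimal Python sketch of the de Broglie relation λ = h/p for an electron accelerated from rest through a voltage V. It assumes the non-relativistic momentum p = √(2meV), so it becomes inaccurate at tens of kilovolts and above; the voltages in the loop are illustrative choices, not values from any particular experiment.

```python
import math

# Physical constants (CODATA values, SI units)
H = 6.62607015e-34        # Planck constant, J*s
M_E = 9.1093837015e-31    # electron rest mass, kg
Q_E = 1.602176634e-19     # elementary charge, C

def de_broglie_wavelength(volts: float) -> float:
    """Non-relativistic de Broglie wavelength (in metres) of an electron
    accelerated from rest through a potential difference `volts`."""
    momentum = math.sqrt(2.0 * M_E * Q_E * volts)  # p = sqrt(2 m E), with E = qV
    return H / momentum                            # lambda = h / p

for v in (10, 100, 1000):
    print(f"{v:>5} V -> {de_broglie_wavelength(v) * 1e9:.4f} nm")
```

At 100 V the wavelength comes out around 0.12 nm, comparable to the spacing between atoms, which is why electron interference needs crystals or extremely fine slit structures to show up.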

But this explanation raised a natural and difficult question: what, exactly, is the nature of this electron wave? The electron double slit experiment illustrates the difficulty. Individual electrons passing through the slits don’t produce waves; they arrive at discrete and seemingly random points on the detector, like particles. However, if many, many electrons are sent through the same experiment, one finds that together they form the interference pattern. This was shown quite spectacularly in 1976 by an Italian research group**:

How do we explain that individual electrons act like particles but many electrons act like waves? The conventional interpretation is known as the Copenhagen interpretation, and was developed in the mid-1920s. In short: the wavefunction of the electron represents the probability of the electron being “measured” with certain properties. When a property of the electron is measured, such as its position, this wavefunction “collapses” to one of the possible outcomes contained within it. In the double slit experiment, for instance, a single electron (or, more accurately, its wavefunction) passes through both slits and has a high probability of being detected at one of the “bright” spots of the interference pattern and a low probability of being detected at one of the “dark” spots. It only takes on a definite position in space when we actually try to measure it.
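The probabilistic picture can be sketched numerically. The Python below is a toy model, not the analysis of the 1976 experiment: it assumes idealized far-field slits (slit spacing, wavelength and screen distance all set to 1, single-slit envelope ignored), adds the two slit amplitudes before squaring to get the detection probability, and then lets each simulated electron land at one random point drawn from that probability. The names `detection_density`, `detect_electrons` and `histogram` are made up for illustration.

```python
import cmath
import math
import random

def detection_density(x):
    """Relative probability density at screen position x (arbitrary units).
    The two slit amplitudes are added *before* squaring (Born rule)."""
    half_phase = math.pi * x                        # k*d*x/(2L) with d = L = 1, k = 2*pi
    a1 = cmath.exp(1j * half_phase) / math.sqrt(2)  # amplitude via the upper slit
    a2 = cmath.exp(-1j * half_phase) / math.sqrt(2) # amplitude via the lower slit
    return abs(a1 + a2) ** 2                        # = 2 cos^2(pi x), between 0 and 2

def detect_electrons(n, half_width, rng):
    """Each electron lands at ONE point, drawn by rejection sampling."""
    hits = []
    while len(hits) < n:
        x = rng.uniform(-half_width, half_width)
        if rng.uniform(0.0, 2.0) < detection_density(x):
            hits.append(x)
    return hits

def histogram(xs, half_width, bins):
    """Count arrivals in equal-width bins spanning [-half_width, half_width]."""
    counts = [0] * bins
    for x in xs:
        i = min(int((x + half_width) / (2 * half_width) * bins), bins - 1)
        counts[i] += 1
    return counts

rng = random.Random(1976)
for n in (10, 100, 10000):
    print(n, histogram(detect_electrons(n, 2.0, rng), 2.0, 20))
```

With 10 electrons the counts look like noise; with 10,000 the bright and dark bands are obvious. Squaring each amplitude separately and then adding (|a1|² + |a2|²) would instead give a flat density of 1 everywhere, which is what "which-slit" particles without interference would produce.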

This interpretation is amazingly successful; coupled with the mathematics developed for the quantum theory (the Schrödinger equation, and so forth) it can reproduce and explain the behavior of most atomic and subatomic systems. However, the wave hypothesis raises many more deep questions! What, exactly, is a “measurement”? How does a wavefunction “collapse” on measurement? If all particles are waves, why don’t we see their wave-like (or quantum) properties in our daily lives? Are the properties of a particle truly undetermined before measurement, or are they well-defined but somehow “hidden” from view?

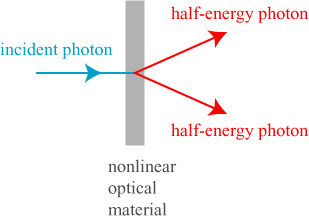

This latter question formed the basis of a famous counterargument to the quantum theory called the Einstein-Podolsky-Rosen paradox, published in 1935. The paradox may be formulated in a number of ways; what follows is a simple model from optics. Using a nonlinear optical material, a photon (light particle) of a given energy can be split into two photons, each with half the energy of the original and propagating in different directions, by the process of spontaneous parametric down-conversion.
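The energy bookkeeping for down-conversion can be checked with a few lines of Python: the pump photon's energy is shared between the two daughters (E_pump = E_signal + E_idler), so in the equal-split case each daughter has twice the pump wavelength. The 405 nm pump is an illustrative value, and the phase-matching (momentum) conditions that a real nonlinear crystal must also satisfy are not modeled here.

```python
HC_EV_NM = 1239.84198  # Planck constant times speed of light, in eV*nm

def photon_energy_ev(wavelength_nm):
    """Photon energy in eV from its wavelength in nm (E = hc / lambda)."""
    return HC_EV_NM / wavelength_nm

def spdc_daughters_nm(pump_nm, signal_fraction=0.5):
    """Wavelengths (signal, idler) when one pump photon splits into two,
    enforcing energy conservation E_pump = E_signal + E_idler.
    signal_fraction = 0.5 is the degenerate 'half the energy each' case."""
    e_pump = photon_energy_ev(pump_nm)
    e_signal = signal_fraction * e_pump
    e_idler = e_pump - e_signal
    return HC_EV_NM / e_signal, HC_EV_NM / e_idler

print(spdc_daughters_nm(405.0))        # equal split
print(spdc_daughters_nm(405.0, 0.4))   # unequal split, for comparison
```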

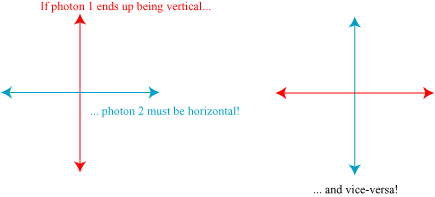

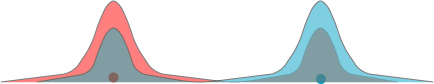

There is an important additional property of these half-energy photons, however; due to the physics of their creation, they have orthogonal polarizations. That is, if the electric field of one photon is oscillating horizontally the other must be oscillating vertically, and vice-versa. However — and this is the important part — nothing distinguishes between the two photons on creation, and nothing chooses the polarization of one or another. Just like the position of the electron in Young’s double slit experiment is genuinely undetermined until we measure it, the polarization of the photons is undetermined until we make a measurement. Nevertheless, there is a connection between the two photons: we don’t know which one has which polarization, but we know for certain that the polarizations are perpendicular. If we were to look at the photon polarization head-on, we might see something of the form shown below:

The photons are said to be entangled; though their specific behavior is undetermined, the physics of their creation still forced a relationship between the two.

Here’s where E, P & R felt there was a paradox: suppose we point our photons to opposite ends of the galaxy. If undisturbed, they remain in this entangled state and can in principle travel arbitrarily far away from one another. Now suppose we measure the polarization of one of the photons, and find the result is vertical; we’ve collapsed the wavefunction, and we now know with certainty that the other photon, at the other end of the galaxy, must be horizontally polarized. By measuring the polarization of one photon, we’ve automatically determined the state of the other one; apparently this wavefunction collapse must happen instantaneously, faster than even the speed of light!
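The perfect anticorrelation can be illustrated with a short Python sketch, assuming the idealized two-photon state (|H,V⟩ + |V,H⟩)/√2 and measurements of both photons in the horizontal/vertical basis only. Each run gives a random result for the nearby photon, yet the distant one always comes out orthogonal. Note that these particular statistics could also be mimicked by pre-assigned "hidden" polarizations; ruling those out requires measurements at other analyzer angles, which this toy does not attempt.

```python
import math
import random

# Assumed state (|H,V> + |V,H>)/sqrt(2), written as amplitudes over the four
# joint H/V outcomes. Neither photon has a definite polarization on its own.
AMPLITUDES = {("H", "H"): 0.0,
              ("H", "V"): 1 / math.sqrt(2),
              ("V", "H"): 1 / math.sqrt(2),
              ("V", "V"): 0.0}

def measure_pair(rng):
    """Measure both photons in the H/V basis; Born rule: P = |amplitude|^2."""
    outcomes = list(AMPLITUDES)
    weights = [abs(AMPLITUDES[o]) ** 2 for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(1935)
for _ in range(5):
    near, far = measure_pair(rng)
    print(f"photon here: {near}  ->  photon across the galaxy: {far}")
```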

This idea of entanglement and its “spooky action at a distance” was intended to demonstrate the ridiculousness of the Copenhagen interpretation of the quantum theory, but in fact it has been verified in countless laboratory experiments. Furthermore, E, P & R’s counter-explanation — that the polarizations of the photons are well-defined on creation, just “hidden” — has been demonstrated to not be true (though intriguing loopholes remain). It has also been shown that entanglement is consistent with Einstein’s special relativity. Although the collapse of the wavefunction can occur instantaneously, it is not possible to transmit any information this way, due (in short) to the random nature of the process.
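Why the instantaneous collapse cannot carry a message can be seen from the Born-rule probabilities themselves. Below is a minimal Python sketch under the same assumed state (|H,V⟩ + |V,H⟩)/√2, with ideal linear polarizers at angles a (nearby photon) and b (distant photon); the function names are illustrative. Whatever angle is chosen for the nearby photon, the distant photon passes its polarizer exactly half the time once the nearby result (which the distant observer cannot see) is summed over, so the choice made here leaves no trace in the statistics gathered there.

```python
import math

def joint_probs(a, b):
    """Born-rule joint probabilities for polarizers at angles a and b (radians)
    acting on the assumed state (|H>|V> + |V>|H>)/sqrt(2).
    Returns {(A passes, B passes): probability}."""
    def analyzer(theta, passed):
        # Unit vector (H component, V component) selected by this outcome.
        return (math.cos(theta), math.sin(theta)) if passed else (-math.sin(theta), math.cos(theta))
    probs = {}
    for pa in (True, False):
        for pb in (True, False):
            ua, ub = analyzer(a, pa), analyzer(b, pb)
            amp = (ua[0] * ub[1] + ua[1] * ub[0]) / math.sqrt(2)  # <ua, ub | psi>
            probs[(pa, pb)] = amp ** 2
    return probs

def prob_b_passes(a, b):
    """Distant photon's pass probability, summed over the nearby outcome."""
    p = joint_probs(a, b)
    return p[(True, True)] + p[(False, True)]

for a_deg in (0, 30, 45, 90):
    print(a_deg, round(prob_b_passes(math.radians(a_deg), 0.0), 12))
```

The joint probabilities do depend on both angles (that is the correlation), but the distant photon's own statistics do not depend on the nearby setting at all.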

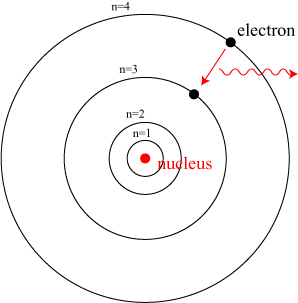

We’ll get to the relevance of entanglement in a moment; we still need one more piece of the puzzle before we can discuss the “everything is connected” video, namely Pauli’s exclusion principle. As we have noted, the introduction of the quantum theory answered many questions, but raised many more. Among other things, the quantum theory predicts that electrons exist only in particular special and discrete “orbits” around the nuclei of atoms. This idea was first introduced in the Bohr model of the atom, as illustrated below:

An electron in a hydrogen atom can only exist in certain discrete stable orbits, labeled in this picture by the index n. Light is emitted from an atom when its electron drops from a higher energy (outer) orbit to a lower energy (inner) orbit. The existence and nature of these discrete orbits is explained by the wave properties of matter: electrons form a “cloud” around the nucleus, rather than orbiting in a well-defined manner.
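The Bohr-model bookkeeping behind emitted light fits in a few lines of Python. This sketch assumes the textbook levels E_n = -13.6 eV/n² (infinite nuclear mass) and converts the energy released in a drop between levels to a photon wavelength with λ = hc/ΔE.

```python
HC_EV_NM = 1239.84198   # Planck constant times speed of light, eV*nm
RYDBERG_EV = 13.605693  # hydrogen binding energy, infinite nuclear mass, eV

def bohr_energy_ev(n):
    """Energy of Bohr orbit n in eV (negative means bound)."""
    return -RYDBERG_EV / n ** 2

def emission_wavelength_nm(n_upper, n_lower):
    """Wavelength of the photon emitted when the electron drops n_upper -> n_lower."""
    delta_e = bohr_energy_ev(n_upper) - bohr_energy_ev(n_lower)
    return HC_EV_NM / delta_e

for upper, lower in ((2, 1), (3, 2), (4, 2)):
    print(f"n={upper} -> n={lower}: {emission_wavelength_nm(upper, lower):.1f} nm")
```

The n = 3 → 2 drop lands near 656 nm, the red Balmer line of hydrogen; the small offset from the measured line comes from ignoring the proton's finite mass.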

But the wave nature of matter also raises a new problem: electrons are now somewhat “squishy”! In larger atoms with multiple electrons orbiting the nucleus, it was readily found that only a finite number of electrons can fill each orbital position/energy level. One is naturally led to wonder why all the electrons don’t just fill the lowest energy state of the atom, the “n=1” state; because the electrons are wavelike and “squishy”, there doesn’t seem to be anything prohibiting this.

This was one problem that Wolfgang Pauli (1900-1958) concerned himself with. The answer he developed became known as the Pauli exclusion principle: no two identical fermions can occupy the same quantum state. “Fermions” include electrons, protons and neutrons: the constituent parts of ordinary matter. Under the Pauli principle, electrons cannot all pile into the ground state of an atom. Because electrons possess intrinsic angular momentum (“spin”) which can either be “up” or “down”, and this is part of the electron’s quantum state, two electrons can fit in the ground state with the same energy but with different spins.
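The counting that follows from Pauli's rule can be sketched in Python for a hydrogen-like atom, labeling each state by (n, l, m_l, spin). The exclusion principle is imposed here by construction (each label is handed out at most once) rather than derived, and the lowest-n-first filling order is a simplifying assumption that ignores real many-electron effects such as Hund's rule.

```python
def states_in_shell(n):
    """Every (n, l, m_l, spin) label for principal quantum number n in the
    hydrogen-like picture: l = 0..n-1, m_l = -l..l, spin up or down."""
    return [(n, l, m, spin)
            for l in range(n)
            for m in range(-l, l + 1)
            for spin in ("up", "down")]

def fill_lowest(num_electrons, max_n=4):
    """Give each electron its own state, lowest n first (then lowest l).
    Crude ordering: ignores effects such as 4s filling before 3d."""
    available = [s for n in range(1, max_n + 1) for s in states_in_shell(n)]
    if num_electrons > len(available):
        raise ValueError("not enough states below max_n")
    return available[:num_electrons]

print([len(states_in_shell(n)) for n in range(1, 5)])  # [2, 8, 18, 32]
carbon = fill_lowest(6)
print(carbon)
```

The shell capacities come out as 2n², and the factor of 2 is exactly the spin freedom that lets two electrons share the ground-state energy.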

Keep in mind that the Pauli principle applies to the complete state of an electron; this potentially includes its energy, its momentum, its spin, and its position in space. Any property that distinguishes a pair of electrons counts as a difference in their quantum states, and so keeps the exclusion principle satisfied.

Now we’ve hopefully got enough information to understand what Cox is trying to say in the video linked above. Let’s dissect it one step at a time:

For example, in this diamond, there are 3 million billion billion carbon atoms, so this is a diamond-sized box of carbon atoms. And here’s the thing, the Pauli exclusion principle still applies, so all the energy levels in all the 3 million billion billion atoms have to be slightly different in order to ensure that none of the electrons sit in precisely the same energy level; Pauli’s principle holds fast.

This is a well-known and accepted property of matter. The electrons in a piece of bulk material are all “squashed together”, just like the multiple electrons in a complex atom are all squashed together. In an individual atom, the electrons must stack up into the different quantum states (different energies, different spins) that are permitted by the electron/nucleus interaction. In a bulk piece of crystal, a similar argument applies: there are a large number of permissible quantum states allowed, in which electrons are “spread out” over the size of the crystal; Pauli’s principle indicates that each electron must be in a different state, and they end up filling a “band” of energies.
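How one atomic level turns into a band can be shown with a standard toy model, sketched here in Python: a ring of N identical atoms with a hypothetical nearest-neighbour coupling t (the tight-binding approximation), whose levels are E_k = E_0 - 2t cos(2πk/N). This is a textbook idealization, not a model of diamond specifically.

```python
import math

def ring_levels(num_atoms, e0=0.0, hopping=1.0):
    """Sorted energy levels of a tight-binding ring of identical atoms:
    E_k = e0 - 2 t cos(2 pi k / N) for k = 0..N-1.
    One atomic level e0 becomes N levels spread over [e0 - 2t, e0 + 2t]."""
    return sorted(e0 - 2 * hopping * math.cos(2 * math.pi * k / num_atoms)
                  for k in range(num_atoms))

for n in (4, 10, 1000):
    levels = ring_levels(n)
    print(f"N={n}: {len(levels)} levels from {levels[0]:.3f} to {levels[-1]:.3f}")
```

However large N gets, every level stays inside a band of width 4t, so the spacing between levels shrinks as atoms are added. The levels for k and N - k coincide in energy; those states differ in crystal momentum instead, a reminder that it is the complete state, not the energy alone, that has to differ.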

But it doesn’t stop with the diamond, see, you can think that the whole universe is a vast box of atoms, that countless numbers of energy levels all filled by countless numbers of electrons.

Here’s where things start to go off the rails for me, and it seems like a dirty trick is being pulled. In a crystal, there are a large number of strongly-interacting electrons packed together, and it is natural — and demonstrable — that the wavefunctions of the electrons spread out over the entire bulk of the crystal, with the sides of the crystal forming a natural boundary. But jumping to the cosmological scale, we don’t “see” electrons whose wavefunctions stretch over the extent of the universe — our experiments show electrons localized to relatively small regions. Even if we treat the universe as a big box — and it’s unclear that this is even a reasonable argument to make — the behavior of electrons in the “universe box” is really, fundamentally different from the behavior of electrons in a “crystal box”. I think that Sean Carroll over at Cosmic Variance was saying something very similar when he notes, “but in the real universe there are vastly more unoccupied states than occupied ones.” That is: in a crystal, the electrons are “fighting” to find an unoccupied energy level to occupy, like a quantum-mechanical game of musical chairs. Over the entire extent of the universe, however, there are plenty of open energy levels — much like finding chairs at a Mitt Romney event in Michigan.

So here’s the amazing thing: the exclusion principle still applies, so none of the electrons in the universe can sit in precisely the same energy level.

Now we’re really getting into trouble. In a crystal, where the electrons are all essentially “smeared out” over the volume, the energy levels must necessarily split. But electrons in the universe don’t seem to be smeared out in the same way. It would seem to me that electrons separated widely in space — around different hydrogen atoms on opposite ends of the universe, for instance — would be perfectly well distinguished by their relative positions, and not need to have energy level splits. More on this in a moment.

But that must mean something very odd. See, let me take this diamond, and let me just heat it up a bit between my hands. Just gently warming it up, and put a bit of energy into it, so I’m shifting the electrons around. Some of the electrons are jumping into different energy levels. But this shift of the electron configuration inside the diamond has consequences, because the sum total of all the electrons in the universe must respect Pauli. Therefore, every electron around every atom in the universe must be shifted as I heat the diamond up to make sure that none of them end up in the same energy level. When I heat this diamond up all the electrons across the universe instantly but imperceptibly change their energy levels. So everything is connected to everything else.

Now the explanation has actually made the leap into being simply wrong! We have noted that, with entangled quantum mechanical particles, it is possible to instantaneously modify the wavefunction of one member of the entangled pair by manipulating (measuring) the properties of the other. But, as we noted, nothing physical can be transmitted by this faster-than-light wavefunction collapse. Cox specifically says in this lecture that heating the electrons in his piece of diamond instantly changes the energy levels, i.e. the *energy*, of the electrons across the universe! A change in energy is a physical change of a particle, and this is specifically forbidden by the laws of physics as we know them.

Another thought came to me as I was reading this, and I found that it was already stated by Tom over at Swans on Tea. If all the electrons in the universe necessarily have different energies, then they are always in different quantum states, and the Pauli exclusion principle would become irrelevant! It would seem to imply that we could pile an arbitrary number of electrons into the ground state of a hydrogen atom, although they would have slightly different, experimentally indistinguishable energies. Obviously, we don’t see this. There may be a problem with this argument as well, but it illustrates (as Tom says) that broad-reaching statements about atomic energy levels can have more implications than one would at first think.

As stated, Cox’s argument is really incorrect, and violates relativistic principles. One can argue, in his defense, that this is a consequence of trying to simplify things for a popular audience, and that he really meant something a little more subtle. However, he makes a similar argument on an undergraduate physics page, and he linked to it in defense of his lecture.

Imagine two electrons bound inside two hydrogen atoms that are far apart. The Pauli exclusion principle says that the two electrons cannot be in the same quantum state because electrons are indistinguishable particles. But the exclusion principle doesn’t seem at all relevant when we discuss the electron in a hydrogen atom, i.e. we don’t usually worry about any other electrons in the Universe: it is as if the electrons are distinguishable. Our intuition says they behave as if they are distinguishable if they are bound in different atoms but as we shall see this is a slippery road to follow. The complete system of two protons and two electrons is made up of indistinguishable particles so it isn’t really clear what it means to talk about two different atoms. For example, imagine bringing the atoms closer together – at some point there aren’t two atoms anymore.

You might say that if the atoms are far apart, the two electrons are obviously in very different quantum states. But this is not as obvious as it looks. Imagine putting electron number 1 in atom number 1 and electron number 2 in atom number 2. Well after waiting a while it doesn’t anymore make sense to say that “electron number 1 is still in atom number 1”. It might be in atom number 2 now because the only way to truly confine particles is to make sure their wavefunction is always zero outside the region you want to confine them in and this is never attainable.

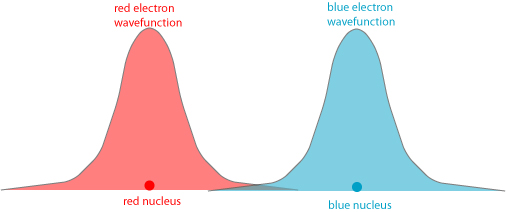

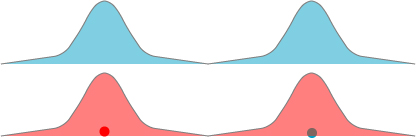

We can try to explain this more elaborate and detailed argument pictorially for two electrons. What Cox seems to be espousing here is essentially that two electrons naturally evolve into an entangled state after some period of time. How this would work: we start with two electrons (labeled “red” and “blue” for clarity, though they are in reality completely indistinguishable particles) surrounding two different hydrogen nuclei. Let us suppose they start spatially separated, as shown below:

Because the red electron’s wavefunction stretches into the domain of the blue atom, and the blue electron’s wavefunction stretches into the domain of the red atom, as time goes on it becomes increasingly likely that the red and blue electrons have switched places. The wavefunctions may evolve to something like this:

Eventually, after sufficient time has passed, each electron is equally likely to be in either atom, which we crudely sketch as:

That is, we expect the wavefunctions to be identical for the two electrons. But they can’t be identical, according to the Pauli principle! Therefore something else must shift in the wavefunctions to make them distinguishable — one ends up with a slightly higher energy, one ends up with a slightly lower energy.
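The two-atom picture can be made semi-quantitative with a two-level sketch in Python. The assumptions are all hypothetical: the "electron on atom 1" and "electron on atom 2" states share an energy E_0 and are coupled by a tunneling matrix element t that falls off as t_0 exp(-R/a_0) with separation R, with t_0 = 1 eV. The even and odd combinations then sit at E_0 ∓ t, and an electron starting on one atom has fully moved to the other after a time πħ/(2t). The real falloff for hydrogen has a different prefactor, but only the exponential trend matters here; logarithms are used because exp(-R/a_0) underflows for macroscopic R.

```python
import math

HBAR_EV_S = 6.582119569e-16        # reduced Planck constant, eV*s
BOHR_RADIUS_M = 5.29177210903e-11  # Bohr radius a0, m

def eig_sym_2x2(a, b, d):
    """Eigenvalues (low, high) of the real symmetric matrix [[a, b], [b, d]]."""
    mean, half_gap = (a + d) / 2, math.hypot((a - d) / 2, b)
    return mean - half_gap, mean + half_gap

def log10_hopping_ev(separation_m, t0_ev=1.0):
    """log10 of a hypothetical tunneling element t(R) = t0 * exp(-R / a0)."""
    return math.log10(t0_ev) - (separation_m / BOHR_RADIUS_M) / math.log(10)

def log10_swap_time_s(separation_m, t0_ev=1.0):
    """log10 of pi*hbar/(2t): time for an electron that starts on one atom
    to be found on the other with certainty, in the two-level model."""
    return math.log10(math.pi * HBAR_EV_S / 2) - log10_hopping_ev(separation_m, t0_ev)

low, high = eig_sym_2x2(-13.6, -0.01, -13.6)  # hypothetical t = 0.01 eV
print("splitting:", high - low, "eV")
for r_over_a0 in (5, 10, 50, 1e10):
    r = r_over_a0 * BOHR_RADIUS_M
    print(f"R = {r_over_a0:g} a0: swap time ~ 10^{log10_swap_time_s(r):.3g} s")
```

At ten Bohr radii the swap takes well under a nanosecond in this model; at one metre the exponent itself is in the billions, vastly longer than the age of the universe (roughly 4 × 10^17 s).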

What we’ve got here is what I imagine would be considered a form of entanglement: we know with certainty that there is only one electron around each nucleus, but the specific location of either is undetermined.

This idea isn’t particularly controversial: this is essentially what happens in crystals, as we have discussed, and what happens to the electrons in molecules or otherwise interacting atoms. But this is just a description for two atoms; can we make the same sort of argument on a universal scale? Here I have two problems. The first is that extending the argument that far raises too many unanswered questions, among them:

- Time. How long does it take to get such an entanglement between two electrons? For two electrons next to one another, I imagine it would be nearly instantaneous, but for two electrons separated by light-years? I’m guessing the period of time is very, very large, which brings me to my next point…

- Stability. It is not easy to produce significantly entangled photons in the laboratory, and it is hard to maintain that entanglement. Keeping two particles entangled for long periods of time is experimentally nontrivial, due to external interactions with other particles: in essence, our quantum system is being continually “measured” by outside influences. Do widely separated electrons ever form an appreciable degree of entanglement? Completely unclear, and rather doubtful.

- Infinity. Part of the argument for this universal entanglement is built on the idea that the spatial wavefunctions of electrons are of infinite extent, i.e. they are spread out throughout all of space. Indeed, stationary (definite energy) solutions of the Schrödinger equation are infinitely spread out, but I would be very cautious about drawing concrete conclusions from that observation. In optics, classical states of “definite energy” are monochromatic waves, which are used all the time to make optics calculations convenient. It follows from the mathematics that monochromatic waves are always of infinite extent, just like wavefunctions, but here’s the thing: nobody with any sense in optics assumes that this infinite extent is a physical behavior from which one should derive concrete physical conclusions. A monochromatic wave is just a convenient idealization of the real physics***.
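The optics point about monochromatic waves can be checked numerically. This Python sketch builds a wave packet by summing plane waves exp(ikx) with Gaussian weights of spread s in k (arbitrary units; the carrier k_0 = 5 and the grid sizes are illustrative choices) and measures the RMS width of |ψ(x)|². For a Gaussian the expected width is 1/(√2 s), so as the spread in k shrinks toward a single "definite" value, the width grows without limit; the monochromatic wave is the s → 0 limit, with no finite width at all.

```python
import cmath
import math

def packet_density(x, k0, spread, n_k=401):
    """|psi(x)|^2 for a sum of plane waves exp(i k x) with Gaussian weights
    exp(-(k - k0)^2 / (2 spread^2)), on a discrete k grid of +/- 6 spreads."""
    ks = [k0 - 6 * spread + 12 * spread * j / (n_k - 1) for j in range(n_k)]
    psi = sum(math.exp(-(k - k0) ** 2 / (2 * spread ** 2)) * cmath.exp(1j * k * x)
              for k in ks)
    return abs(psi) ** 2

def rms_width(spread, k0=5.0, n_x=601):
    """Numerical RMS width of the packet's position density."""
    half_range = 10.0 / spread  # about 14 predicted widths on each side
    xs = [-half_range + 2 * half_range * i / (n_x - 1) for i in range(n_x)]
    dens = [packet_density(x, k0, spread) for x in xs]
    norm = sum(dens)
    mean = sum(x * d for x, d in zip(xs, dens)) / norm
    return math.sqrt(sum((x - mean) ** 2 * d for x, d in zip(xs, dens)) / norm)

for spread in (1.0, 0.1, 0.01):
    print(f"k-spread {spread:>5}: width {rms_width(spread):8.2f}, "
          f"predicted {1 / (math.sqrt(2) * spread):8.2f}")
```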

A natural question to ask at this point: isn’t physics all about deriving general conclusions from simple physical laws? Why are you being more cautious with Pauli, and quantum mechanics, than you are with, say, gravity and electromagnetism? Part of the difference is, as we have noted above, that we simply do not understand the quantum theory well enough to boldly derive such universe-wide conclusions. An even more important difference, though, is that I can see the universal consequences of gravitation and electromagnetism experimentally, whereas it is not clear what consequences, if any, this “universal Pauli principle” provides. Which brings me to my final observation; returning to Cox’s lecture notes:

The initial wavefunction for one electron might be peaked in the region of one proton but after waiting for long enough the wavefunction will evolve to a wavefunction which is not localized at all. In short, the quantum state is completely specified by giving just the electron energies and then it is a puzzle why two electrons can have the same energy (we’re also ignoring things like electron spin here but again that is a detail which doesn’t affect the main line of the argument). A little thought and you may be able to convince yourself that the only way out of the problem is for there to be two energy levels *whose energy difference is too small for us to have ever measured in an experiment*.

Emphasis mine. HOLY FUCKING PHYSICS FAIL. Here Cox explicitly acknowledges that his “universal Pauli principle” consequences are something that not only cannot be measured today, but in principle can never be measured, by anyone.

THEN WHY THE HELL ARE WE TALKING ABOUT IT??!!

At its core, physics is all about experiment. Experimental tests of scientific hypotheses are what distinguish physics (and all science, really) from general philosophy and, worse, mysticism and pseudoscience. Consider the application of Cox’s conclusion to a few other situations:

- Astrology is the influence of the stars upon human beings via quantum mechanical influences whose energy difference is too small for us to have ever measured in an experiment.

- Homeopathy is the lingering effect of chemical forces on water via quantum mechanical changes to the water whose energy difference is too small for us to have ever measured in an experiment.

- The human soul exists materially in the quantum wavefunction of a human being, manifesting itself in changes whose energy difference is too small for us to have ever measured in an experiment.

In my eyes, there really is not much difference between various pseudoscientific shams being propagated in the world today and the logical argument of a “universal Pauli principle”. (When I mentioned this argument to a colleague, he said, “Ask him how many angels dance on the head of a pin.“) In a sense the whole discussion of this blog post has been a waste of time: my theoretical counterarguments may be reasonable or they may not; we can never draw any conclusion about the reality of this universal principle because it lies outside our ability to ever detect it.

I tend to be rather forgiving of using simple, arguably misleading, models to introduce physical principles. For instance, I’m a defender of the use of the Bohr model as a good tool to expose students to quantum ideas in a simple and historical way. My criterion, however, is this: a model or explanation must, as a whole, guide students in the right direction towards the greater “truth”, such as it is in science. The “universal Pauli principle” fails this on two counts: it gives a false impression of the importance of completely unexperimental conclusions, and it opens the door to pseudoscientific nonsense. Nevertheless, Cox doubled down on his statements in a Wall Street Journal article, somehow arguing that his original argument is a necessary evil in a world where the public needs to be excited about science.

In a sense, though, we have ironically come full circle on Pauli. It was none other than Wolfgang Pauli who coined the phrase “not even wrong” to describe theories that cannot be falsified or cannot be used to make predictions about the natural world. It has most recently been used to describe string theory, with the argument that the predictions of string theory cannot be tested with any experimental apparatus that exists. However, string theory can at least in principle be tested, albeit not today, whereas it seems that the “universal Pauli principle” described by Cox has no measurable consequences, in principle, and is immune to any test imaginable. It serves no useful purpose in the world of physics, and as we have noted there are many objections to it actually working the way it is advertised to work.

I was recently thinking of the many advantages to the explosion of science communicators on the internet, and one that struck me is that we no longer have to rely on a single or a small number of “authority figures” to tell us what is right and wrong in the scientific world. This entire fiasco emphasizes how important this new abundance of voices will be in an ever more complex universe.

With no hypothesis to test, and no measurable consequences for science, I conclude my thoughts on the “universal Pauli principle”.

Requiescat in pace,

“omnia conjuncta est”¹

**************************

* If someone wants to get in a pointless pissing match of who is more of an “armchair physicist” based on CVs, though, I’m your huckleberry.

** P.G. Merli, G.F. Missiroli and G. Pozzi, “On the statistical aspect of electron interference phenomena,” Am. J. Phys. 44 (1976), 306-307.

*** Curiously, in arguing against the use of the spatial distribution of a quantum wavefunction in providing “distinguishability” of electrons, Cox uses a “momentum eigenstate” — a particle of perfectly specified momentum and infinitely uncertain position. This is pretty much the equivalent of a monochromatic plane wave in optics, which again nobody would use as a realistic example of how the world works.

¹Thanks to Twitter folks for suggesting the translation of the latter phrase. Alternate translation: “omnes continent”.

*********

Postscript: A couple of friends (including @minutephysics) have pointed out that none of the discussions so far have included quantum field theory, which makes things even more complicated (non-conservation of particle number, for instance).

Bellum omnia contra omnes?

Ha! Apparently we’ve reached that stage.

So it has come to this.

http://forums.xkcd.com/viewtopic.php?f=7&t=81116

Also, I suppose that sign off makes you the Ezio Auditore of physics blogging?

Oooh, I like that characterization! >:)

This post rocks on so many levels…though, I remain skeptical as to the degree with which it rocks.

Hmmmm. This is interesting stuff. I don’t buy Prof Cox’s argument, but I don’t buy your entire rebuttal above.

It’s hard to argue against the infinite extent of the wavefunction of a particle. Forget about Pauli for a moment, and forget about crystals. Let’s talk about a massive bunch of Hydrogen atoms a billion light years away undergoing fusion and releasing a massive bunch of high-energy photons. Is the probability that one of these photons will interact with the wavefunction of the detector elements in one of our super duper telescopes (a billion years later) vanishingly small? No, we can see them with a big enough telescope.

What about if there were half as many? A tenth? A hundredth? A millionth? A 1/6.0221415 × 10^23th?

What if there were just two Hydrogen atoms?

We are left with the conclusion that the wavefunction never truly deteriorates to zero. There is no magic cut-off point. Strap enough similar events together, and they will have a measurable effect (eventually) on the other side of the universe, proving the non-vanishing probability of the constituent events.

As for Pauli’s exclusion principle, it’s my understanding (I’m no expert) that this applies to electrons only after they have become bound to the conglomeration of orbitals making up Prof Cox’s crystal. So an electron with a peak probability of being found on the other side of the Universe is not going to concern itself with taking on a unique energy level unless it becomes bound.

I would maintain that it DOES have a finite chance of becoming bound though, no matter how far away it is. Of course, its ‘probability wave’ would first need to have travelled at the speed of light to reach the crystal in the first place.

Right??

Phil: thanks for the comment!

Admittedly I’m a little unsure about the wavefunction extent, though it seems that, in a very simple sense, it must still be subject to relativistic effects. Otherwise, there is a non-zero probability that an electron in a definite position (after measurement) here is instantaneously on the other side of the universe. There are a lot of other arguments that could potentially be made, though, especially once one brings in the full relativistic field theory, so I can’t be sure that some other complication involving many-particle interactions doesn’t muck things up.

Your hydrogen atom argument has a problem, in that when you talk about the photons being emitted by a hydrogen atom, you’re talking about the “wavefunction” of the photon, not the wavefunction of the hydrogen atom! There is certainly the possibility of long-range interaction via photons and presumably gravitons, and presumably there is a long-range correlation between the atom and the photon, but this doesn’t imply the atom’s wavefunction itself has “gone the distance”.

That seems to be part of the problem, provided you assume that the electrons were measured in a definite state at some point of their existence, as noted above!

The point was to illustrate that current QFT does not impose any limit on the spatial extent of a free particle’s wavefunction. I am not sure whether being bound to atomic matter is supposed to make the wavefunction actually go to zero (as opposed to just really small) outside a certain zone… but if not, I still can’t fault that aspect of Prof Cox’s argument (I still don’t buy the non-local part though).
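To put a rough number on “really small”: in the idealized, non-relativistic hydrogen atom, the 1s radial probability density decays like e^(-2r/a0), so the chance of finding the electron beyond a radius R works out to e^(-2x)(1 + 2x + 2x²) with x = R/a0. It never reaches exactly zero, but it falls off absurdly fast. The sketch below evaluates it in log form so it doesn’t underflow; it assumes the textbook Schrödinger hydrogen atom and ignores relativity and field theory entirely, so treat it as an illustration rather than a settlement of the argument.

```python
import math

BOHR_RADIUS_M = 5.29177210903e-11  # Bohr radius a0 in metres (CODATA 2018 value)

def log10_prob_outside(radius_m: float) -> float:
    """log10 of the probability that a hydrogen 1s electron is found
    farther than radius_m from the nucleus (idealized Schrodinger atom).

    Uses P(r > R) = exp(-2x) * (1 + 2x + 2x^2) with x = R / a0,
    evaluated as a logarithm so huge x does not underflow to 0.0."""
    x = radius_m / BOHR_RADIUS_M
    return (-2.0 * x + math.log(1.0 + 2.0 * x + 2.0 * x * x)) / math.log(10.0)

for r in (BOHR_RADIUS_M, 1e-9, 1e-6, 1.0):
    print(f"R = {r:.3e} m  ->  log10 P(r > R) = {log10_prob_outside(r):.4g}")
```

At one Bohr radius the probability is still about 0.68; at one metre its base-10 logarithm is of order minus ten billion.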

You provided a general explanation about a whole lot of things, and I want to use my imagination from that.

As I see it, classical physics stopped making sense around the turn of the 20th century, and over time it was replaced by newer stuff, notably quantum mechanics. The new view was a statistical one which was never intended to make sense. Statistical ideas are notoriously confusing, and people get confused about causality, the extent that statistical results apply to individual cases, etc.

I’m curious whether an alternative classical view can make sense. QM would still apply to statistical experiments — probably all of them — but with a model that made sense it might be easier to apply QM.

“An example of the difficulties is provided by the electron double slit experiment. Individual electrons passing through the slits don’t produce waves; they arrive at discrete and seemingly random points on the detector, like a particle. However, if many, many electrons are sent through the same experiment, one finds that the collection of them forms the interference pattern.”

This looks like a good place to start. People have argued since Newton’s time whether light is made of particles or waves. Then we got the same results with electrons. Ideally we could get a model which explained everything that looks like particles as waves, and everything that looks like waves as particles. Then we could choose either approach and make it work.

The electron detectors detect quanta. Either there is a detection or there is not. So even if the electrons were behaving exactly like waves, they would be detected as particles.

Is there a way that particles could diffract like waves? I will describe one way that could happen. I don’t claim it could happen this way with electrons, but if there is one way that particles can do it, there might be another way that actually fits the data. So any way that gets particles to diffract is a start.

First, I need particles that spin in unison. The experiment is set up so they all spin clockwise around their up axis. And they are somehow asymmetrical so it matters how far along they are in their rotation. A particle turned around to 90 degrees isn’t the same as one that has rotated to 270 degrees.

And then we have limited detectors. I imagine a detector which can’t detect a single particle but only the sum of say 6 particles. If it gets 6 particles in a row with spin between 0 and 180 degrees, it registers a hit. But if any one of the 6 is between 180 and 360 degrees, the hit is lost and it then will register a hit if there are 5 more between 180 and 360 degrees.

The particles go through a slit and on to the detectors. When they go through the slit their directions are randomized, but their spins are still synchronized: each one is at 0 degrees at that moment. At some distance to the left side, particles that come near the right side of the slit will travel farther than particles that come near the left side of the slit, and they will end up as much as 180 degrees out of phase, so all of them will be between 0 and 180 degrees. So there will be lots of detections. A bit farther to the left, half of them will be one kind and half the other. There will be very few detections. Etc.

With the right kind of particles and the right kind of detectors, you can get diffraction.

It does not matter that only one particle goes through the slit at a time, provided the detector state changes whenever the next particle arrives.
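Here is a minimal sketch of the detector rule described above, assuming (hypothetically) that the slit geometry can be summarized by how widely the arriving particles’ rotation phases are spread. The helper names `count_hits` and `simulate` are made up for illustration: a hit is registered after six consecutive particles in the same half-turn, and a particle from the other half restarts the count. It only checks the qualitative claim, that tightly bunched phases give many detections and phases spread over a full turn give few; it is not a model of real electrons.

```python
import math
import random

def count_hits(phases, run_needed=6):
    """Toy detector: one hit each time `run_needed` consecutive particles
    arrive in the same half-turn, [0, pi) or [pi, 2*pi). A particle from
    the other half restarts the run from 1."""
    hits = 0
    current_half = None
    run = 0
    for phase in phases:
        half = 0 if (phase % (2 * math.pi)) < math.pi else 1
        if half == current_half:
            run += 1
        else:
            current_half, run = half, 1
        if run == run_needed:
            hits += 1
            current_half, run = None, 0  # start counting afresh after a hit
    return hits

def simulate(phase_spread, n_particles=60_000, seed=1):
    """Particles reach one detector with phases drawn uniformly from
    [0, phase_spread]; the spread stands in (hypothetically) for the
    path-length differences across the slit at that detector position."""
    rng = random.Random(seed)
    phases = [rng.uniform(0.0, phase_spread) for _ in range(n_particles)]
    return count_hits(phases)

print(simulate(math.pi / 2))  # all phases in one half: one hit per 6 particles
print(simulate(2 * math.pi))  # phases spread over both halves: far fewer hits
```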

Particles can appear to diffract. There are probably multiple ways to get diffracting particles. Perhaps one of them might fit the data for light or for electrons.

Thanks for the comment! Actually, the wave nature of particles seems pretty airtight at this point, especially after the theoretical work of John Stewart Bell on Bell’s inequalities and the experimental verification of this, which strongly demonstrates that no “local” theory of particle behavior can reproduce the observed properties of quantum mechanics.

It’s interesting to note, however, that in the history of optics, physicists were even able to explain interference effects and polarization effects to their satisfaction using a particle theory. It was only after diffraction was successfully explained using waves, and led to verifiable predictions, that the wave theory really took off.

I can explain diffraction myself using particles, given a fistful of assumptions. I’m not sure I have the distribution right, but I think I can get it right.

If there are two hypotheses that both explain the phenomenon, how do you decide which to accept? I say, as long as both explain the phenomenon there is no need to decide which to accept.

“Actually, the wave nature of particles seems pretty airtight at this point, especially after the theoretical work of John Stewart Bell on Bell’s inequalities and the experimental verification of this”

I have not studied the details of Bell’s theorem carefully enough to judge it, but I rather doubt it. I’ve seen this sort of thing a lot in probability theory. Start with a collection of assumptions, one of which is wrong. Reason from the assumptions to a conclusion that looks astounding. Argue that the astounding conclusion must be true. But usually one of the original assumptions was wrong instead. Typically people assume that their sampling is not biased, for example, and it almost always is.

” It would seem to me that electrons separated widely in space — around different hydrogen atoms on opposite ends of the universe, for instance — would be perfectly well distinguished by their relative positions, and not need to have energy level splits.”

Peierls also discusses this in his “Surprises in Theoretical Physics” in the context of two electrons at opposite ends of a metre-long metal bar. His conclusion is the same as yours: if the electron states are spatially localised to opposite ends of the bar then, by definition, they are in distinct quantum states and the Pauli principle is automatically satisfied regardless of their energy. IIRC he takes the argument further to make some quantitative estimates, but I forget the details. I like the Peierls example better than the “whole universe” discussion because (i) one can imagine scaling smoothly from a small metallic crystal to a macroscopic metal bar and (ii) a one-metre bar is effectively as big as the universe anyway, seen on the quantum scale.
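A rough way to see why localized states at opposite ends of a bar count as distinct: take two identical Gaussian wave packets, each with |ψ|² of width σ, centred a distance d apart. Their overlap is exp(-d²/8σ²), so it drops off very quickly with separation. The Gaussian shape and the ångström-scale width are assumptions chosen purely for illustration; this is not Peierls’ own estimate.

```python
import math

def log10_overlap(separation_m, width_m):
    """log10 of the overlap <psi_1|psi_2> of two identical real Gaussian
    wave packets whose centres are separation_m apart.

    With psi(x) proportional to exp(-x**2 / (4 * width**2)), so that |psi|^2
    has standard deviation `width`, the overlap is exp(-d**2 / (8 * width**2))."""
    return -(separation_m ** 2) / (8.0 * width_m ** 2) / math.log(10.0)

WIDTH = 1e-10  # assumed localisation length of about one angstrom
for d in (1e-10, 1e-9, 1e-3, 1.0):
    print(f"d = {d:.0e} m  ->  log10 overlap = {log10_overlap(d, WIDTH):.3g}")
```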

Thanks for the comment! I haven’t seen Peierls’ discussion, but I’ll have to look it up…

OK, I’ve seen the video, the blog response, and the comments on that blog. I have some conclusions.

1. If you want somebody to dispassionately discuss science and ways to improve his presentation of science, do not title your criticism “Brian Cox is full of **it”. That does not promote careful dispassionate thought, at least among primates. A primate that sees this will tend to interpret it as poo-flinging, and will tend to fling poo back.

2. Quantum Mechanics (QM) is not intuitive. It is similar to statistics and probability theory that way. There is a mixup between what’s true about the reality, versus what you know about it. We describe our knowledge and our ignorance and then look at what we still know when things change. The transformation rules are complex and unintuitive. It’s hard.

Ideally we design our language to make it easy to think about stuff. The language does some of the thinking for you. Stupid things sound wrong. We’re far from that with QM. The truth sounds wrong. Stuff that sounds plausible pretty much has to be wrong — if it sounds right then it can’t be right.

We try to design our mathematics so that the right answers just fall out easily. This has mostly not been done for QM yet. It’s hard to do the math right.

Given the problems, doesn’t it make sense to display a whole lot of tolerance?

3. Various people say that the discussion is all first-year stuff. But they disagree. It looked like they chose sides quickly enough, and they agree with other people on the same side. But I strongly suspect that for many simple first-year problems stated in simple English, 10 physicists would give 5 or more answers. This stuff is *hard*. Figuring out what the question is when it’s stated in English is even harder.

More later.

This is not really off topic.

One time a friend told me that the Monty Hall solution was wrong. He argued it out.

He said, suppose you come look at the Monty Hall problem at the last minute. There are two doors that aren’t open and one that is open. The right answer is one of the two doors. The probability is .5 that it’s either one. How is that different from the guy who saw the door get opened? For him it was 1/3 for each door, and then he saw that it wasn’t door A. He knows the same thing you know, it’s one of the two that are left. So it’s 50:50.

It took me weeks to persuade him. I wrote a short computer program to model the problem, and showed him the answer came out 2:1. He said I must have done it wrong.

I tried to tell him that the guy who saw the door opened does know something the other guy doesn’t know. That different people can come up with different statistics, and both be right as far as they know. “No, there’s a real probability and if somebody guesses wrong what it is that just means they’re wrong.” I guess he was right on that one, but I was right too.

I finally showed him that Monty was not behaving at random. If Monty instead opened any of the three doors no matter where the prize was, and the game was over if he opened a door that had the prize, then it did come out 50:50 between the two that were left. But it wasn’t easy to show him how that mattered.
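The kind of short program mentioned above might look like this: a hypothetical Python sketch, not the commenter’s actual code, that plays both versions of the game. In the “Monty knows” version he always opens a goat door; in the random version he opens one of the two doors the player didn’t pick (an assumption about how “any door” should be read), and games where he reveals the prize are discarded.

```python
import random

def play(n_games=200_000, monty_knows=True, seed=0):
    """Return the fraction of counted games that are won by switching.

    monty_knows=True: Monty always opens a goat door the player did not pick.
    monty_knows=False: Monty opens one of the two unpicked doors at random;
    games where he reveals the prize are over and are not counted."""
    rng = random.Random(seed)
    switch_wins = counted = 0
    for _ in range(n_games):
        prize = rng.randrange(3)
        pick = rng.randrange(3)
        others = [d for d in range(3) if d != pick]
        if monty_knows:
            opened = rng.choice([d for d in others if d != prize])
        else:
            opened = rng.choice(others)
            if opened == prize:
                continue  # prize revealed: game over, not counted
        switched_to = next(d for d in others if d != opened)
        counted += 1
        switch_wins += (switched_to == prize)
    return switch_wins / counted

print(play(monty_knows=True))   # close to 2/3
print(play(monty_knows=False))  # close to 1/2
```

The only difference between the two runs is whether Monty’s choice depends on where the prize is, which is exactly the detail the argument turned on.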

Probability theory is *hard*. Professionals get it wrong sometimes. Tiny details can change the whole problem. And QM is inherently probabilistic.

If you have to argue about it, for God’s sake don’t do it in English. You haven’t got a chance unless you show the math. And yet, that’s so very tedious….

Indeed, that’s perhaps the major issue with all of this discussion: there’s no way to mathematically model the wavefunction properties of the entire universe to draw the conclusions being made! Even worse, it was conceded in the original talk *and* in the undergraduate lecture that the conclusions being drawn have absolutely no observable physical consequences! I can almost (almost!) understand making such assertions in a popular physics lecture, provided they’re couched in appropriate caveats (“it is possible to view this result as having the surprising consequences of…”), but telling undergraduate students that unmeasurable, unprovable effects of no consequence are important is really, really doing a disservice to people who want to be physicists.

If I gave a talk at a physics meeting where I said, “the following hypothesis has no consequences for physics, explains no unanswered questions and cannot be detected ever”, I would rightly be tarred & feathered, at least metaphorically.

Even worse, it was conceded in the original talk *and* in the undergraduate lecture that the conclusions being drawn have absolutely no observable physical consequences!

I think I’ve seen this before, though I have no idea where to find links.

A long time ago people believed that electric fields and gravitational fields were instantaneous. And somebody in a lecture to laymen said that this meant that everything you did would have some instantaneous effect, no matter how tiny, out to the farthest star.

But then they decided that those fields take time to act. So somebody making a similar lecture said that everything you did would eventually have some effect, no matter how tiny, out to the farthest star.

And now here’s somebody saying the same thing from QM. It doesn’t sound like it means anything beyond feel-good talk to laymen.

….but telling undergraduate students that unmeasurable, unprovable effects of no consequence are important is really, really doing a disservice to people who want to be physicists.

Oh. Physics undergraduates. Hmm. Are they physics undergraduates who do the math? If so, it probably won’t hurt them much at all.

Are they physics undergraduates who don’t do the math? Then they’re laymen who aren’t really learning much, and it won’t matter until they learn the math.

I swear, when I squint a little and let the details fuzz out, this seems a whole lot like arguments I used to hear Baptists make.

“Did you hear him? He said it was OK to try to follow Jesus Christ’s example!”

“What’s wrong with that? Jesus said to follow him, didn’t he?”

“But nobody can be like Jesus, Christ was God and sinful human beings can’t be God.”

“Well, what’s wrong with trying?”

“Wash your mouth out with soap! If you try to be like Christ you’re guilty of the sin of pride! You don’t understand the least little thing about theology and you have the nerve to ask questions! This idiot was telling people it was OK to be sinful! He told them to try to follow Jesus Christ’s example, and you don’t even see what’s wrong with that! You’re just ignorant, but he’s preaching evil and he hasn’t the right!”

If you debate you will argue 🙂

No news there; the problem comes when one gets a little too enthusiastic in one’s arguments. I like your blog for several reasons: open-mindedness, an urge to try to present things right, and very good knowledge of what you discuss. And I don’t mind either of you guys getting it ‘wrong’ now and then. That’s what open minds do: they speculate and find connections and insights, sometimes wrong, but even when right they are forced to present them in painstakingly sound mathematical notation, as stringently and clearly as possible. Einstein took a lot of ‘wrong turns’ in his hunt for relativity, but he got it right in the end, and, as I remember it, also had to invent or learn new ways of presenting it mathematically. So ‘fight’, with a smile; life is too short to take it seriously.

Isn’t it the case that in a Big Bang it’s possible to assume an ‘entanglement’ of it all? If I combine this with a later ‘instant’ inflation creating ‘distances’, then could all particles left ‘untouched’ in their isolation still be entangled? Eh, maybe 🙂

Also, it seems to me as if both a wave function and relativity call ‘distance’ into question, although naturally from different points of view? Not that I doubt the concept; ‘distance’ is here to stay, but what does it mean? And now I’m weirder than any of you 🙂

I found it quite nice reading and I hope that you, as well as all other guys involved, get something fruitful out of your discussion in the end.

I agree that there will always be some arguing! My PhD advisor is one who essentially told me, “When we discuss physics we will get into terrible fights. That’s okay, though, because we’ll all be friends in the end.” In fact, I had some knock-down, drag-out fights with him over physical principles (metaphorically speaking, of course), and we’re still good friends!

There’s no doubt that Tom’s original post was rather tactlessly titled (as J Thomas also noted below); however, Tom’s post raised some valid questions, and certainly my comment was about as polite as one could be (“eyebrow-raiser” doesn’t seem to me to be a horrific insult). The petty sniping that Cox responded with to me and Tom (not understanding undergraduate physics) did not forward the discussion at all, and was really just mean-spirited. And that is the sort of thing that pisses me off. >:)

Yeah, things happen.

I looked at Swans on Tea’s blog too 🙂

What’s good about a discussion like this one is that people present their ideas and interpretations, and so put a lot of otherwise strange concepts in different ‘lights’, making me see how things are thought to work in new ways. Sort of a ‘holistic perspective’ reading the comment section there, at least for me. And both you and Swans are among my favorite bloggers.

Just keep on 🙂

Thinking about it: the definition of entanglement is truly confusing; maybe you have discussed it? Probably you have, and I seem to think I get what it should be at times, and then, some year later, I find myself wondering if I’ve understood the definition of an entanglement at all.

You have the simple way: down-converting one photon into two. That one is easy to understand. But then you have thingies ‘bumping’ into each other, for example, transferring momentum to each other, and of course the ‘indistinguishable electrons’, etc.

And to make my headache even worse, you can also find those defining it as if, with an entangled ‘pair’, there can be no ‘wave function’ breaking down until both are measured, if I now got that one right? It’s been some time since I discussed that.

It’s worth going through, if you haven’t?

I need to write a more detailed post on quantum stuff, perhaps as a “basics” post or two. In entanglement, though, the idea is that the wavefunction breaks down as soon as one of the particles is measured. This results in the “instantaneous collapse of the wavefunction” that so upset Einstein, Podolsky and Rosen. I’ll go into it in more detail soon; in the meantime, you can check out the post on entanglement at Galileo’s Pendulum.

Thanks.

This is my view, and I’m trying to keep it simple: “As I said, a description I like was the one of ‘one particle’. I can go with a ‘wave function’ describing it too, though, as long as we then assume it to be in a pristine ‘superposition’ prior to the measurement, with ‘both sides’ falling out in the interaction/measurement, whether or not the side that did not make the initial measurement measures it later.”

And here is DrChinese’s view: “Nope, generally this is not the case (although there are some complex exceptions that are really not relevant to this discussion). Once there is a measurement on an entangled particle, it ceases to act entangled! (At the very least, on that basis.) So you might potentially get a new entangled pair [A plus its measuring apparatus] but that does not make [A plus its measuring apparatus plus B] become entangled. Instead, you terminate the entangled connection between A and B.

You cannot EVER say specifically that you can do something to entangled A that changes B in any specific way. For all the evidence, you can just as easily say B changed A in EVERY case! This is regardless of the ordering, as I keep pointing out. There is NO sense in QM entanglement that ordering changes anything in the results of measurements. Again, this has been demonstrated experimentally.

My last paragraph, if you accept it, should convince you that your hypothesis is untenable. Because you are thinking measuring A can impart momentum to the A+B system, when I say it is just as likely that it would be B’s later measurement doing the same thing. (Of course neither happens in this sense.) Because time ordering is irrelevant in QM but would need to matter to make your idea be feasible.”

And if I get the idea right here, you might say that it’s a consequence of SR, and of the possibility of different observers getting different ‘time stamps’ for ‘A’ relative to ‘B’, so there might be no ‘universal order’. Instead it will be defined locally. Which is a very interesting thought, if correct. At least it’s the way I interpret it for now 🙂

I will follow that link.

Imagine that you and your girlfriend make an agreement. You take the king of hearts and the queen of diamonds out of a deck of cards. You shuffle them around so nobody knows which is which, and you seal them into two envelopes. You each keep one of them, and you agree that in 30 years you’ll open the envelopes and look at them. It’s a romantic gesture. But 5 years later she dies and she asks that her envelope be buried with her. After 30 years you open your envelope and see the queen of diamonds. You immediately know that her envelope has the king of hearts. But how can you know that? You haven’t dug up her grave and opened her envelope.

The difference between this and Bell’s theorem is that Bell’s theorem says that in the QM case, the decision which card was which could not have been made when the envelopes were sealed. That decision was made when one of the envelopes was opened. And at that time two things changed, two things that might be light years apart or buried in separate graves.

Probability theory doesn’t distinguish between things that have been decided but are unknown, versus things that have not been decided yet. Somebody flipped a coin yesterday and you don’t know which face came up. Somebody will flip a coin tomorrow. Either way, assuming a fair coin, your best guess is 50% either way. If you have reason to think it’s a biased coin that comes up heads 55% of the time, then your best guess is 55% heads either way. Once you find out the truth, then it isn’t 50% or 55%; you know. It’s either heads or tails.

The easy interpretation is that it doesn’t matter whether something is real but unknown versus not-decided-yet. Either way, you have your best guess now and when you find out the truth you’ll know. There’s no point arguing whether really the cards are separated and in their envelopes, or whether a ghost magically paints the cards just before you open the envelopes, because there’s no way to tell which it is. Just use what you do know, which is first the guess and then the reality.

But Bell’s theorem proves that it cannot be true that the truth is real but unknown. It has to be true that the state does not exist until two random but correlated states are created when one of them is observed. Without that proof, there is nothing special going on.

Somehow, quantum mechanics is arranged so that it is impossible for the truth to exist but be unknown. That’s the part that’s hard to understand. What we need is a good simple explanation why, for example, two photons that are created to have complementary polarization but we don’t know what polarization they have, are not actually polarized any way in particular until we measure the polarization on one of them. QM says it can’t be true that their polarization state was set when they were created, and we only discover it later. QM says that in reality they have only a probability of polarization until it becomes real when one of them is measured.

Why does QM make it impossible for the truth to be real but unknown?
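One way to make Bell’s argument concrete is its CHSH form. The sketch below compares a hypothetical “sealed envelope” model, in which each pair carries a shared hidden angle fixed when the pair is created and each detector answers +1 or -1 using only that angle and its own setting, with the quantum prediction E(a, b) = -cos(a - b) for a spin singlet. The measurement angles are the standard textbook choice; the envelope model is just one illustrative local model. Any model of the envelope kind keeps |S| ≤ 2, while quantum mechanics reaches 2√2, and experiments side with quantum mechanics.

```python
import math

# Standard CHSH measurement angles (radians) for a spin singlet.
A, A2, B, B2 = 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4

def chsh(E):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(A, B) - E(A, B2) + E(A2, B) + E(A2, B2)

def e_quantum(a, b):
    """Quantum-mechanical correlation for the spin singlet."""
    return -math.cos(a - b)

def sign(x):
    return 1 if x >= 0 else -1

def e_envelope(a, b, n=3600):
    """Hypothetical 'sealed envelope' model: each pair carries a hidden
    angle lam set at creation; each detector's +/-1 answer depends only on
    its own setting and lam. Averaged over lam on a uniform grid."""
    total = 0
    for k in range(n):
        lam = 2 * math.pi * (k + 0.5) / n
        total += sign(math.cos(a - lam)) * -sign(math.cos(b - lam))
    return total / n

print(f"envelope model:    |S| = {abs(chsh(e_envelope)):.3f}")  # cannot exceed 2
print(f"quantum mechanics: |S| = {abs(chsh(e_quantum)):.3f}")   # 2*sqrt(2)
```

This particular local model happens to hit the bound of 2 exactly; no choice of hidden-variable rule of this kind can push past it.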

A nice description J 🙂

And one that I agree with, and actually can understand. Your description has been confirmed many times: even though you know that ‘B’ will have a complementary ‘polarization’, you can’t define it until you measure ‘A’, or vice versa. You can’t know what the polarization will be for either of the space-separated objects until you measure one of them, only that they will be opposite. That is, if I got you right?

DrChinese comments on it: “So you might potentially get a new entangled pair [A plus its measuring apparatus] but that does not make [A plus its measuring apparatus plus B] become entangled. Instead, you terminate the entangled connection between A and B.

You cannot EVER say specifically that you can do something to entangled A that changes B in any specific way. For all the evidence, you can just as easily say B changed A in EVERY case! This is regardless of the ordering, as I keep pointing out. There is NO sense in QM entanglement that ordering changes anything in the results of measurements. Again, this has been demonstrated experimentally.”

And that’s where my headache begins, although physics is on the whole a headache, mostly a nice one. If you have a wave function describing a ‘particle’ or an entanglement, it must be your observation that ‘sets’ it. And if what you observe is separated in space, then what you observe of it should set it all. But I’m getting the impression that a ‘spacelike’ separation, as in this entanglement case, now allows me to say that, no matter whether I observe ‘A’ or ‘B’, I could define it so that the observation I make has nothing to do with what ‘sets’ what.

That’s why I like the ‘one particle’ definition better: in that one it becomes meaningless to discuss ‘causality chains’, since in such a definition the ‘wave function’ is a whole object, in which you ‘instantaneously’ set a state for ‘both’.

But then I have SR, of course, but it shouldn’t really matter there, should it? No matter what ‘time stamp’ different observers give, ‘A’ or ‘B’ first, it still has to be ‘one particle’s wave function’ getting set?

Then again, I really need to look at it from first principles, and see if I really get it..

Yoron, the following link (which Dr. Skull provided) is extremely unclear because as a Wikipedia page it incorporates lots of different ideas which disagree.

http://en.wikipedia.org/wiki/Bell%27s_theorem

It does include a quote from Jaynes. He was very good at statistics and probability theory, and had a lot to say about QM as a result.

Causes cannot travel faster than light or backward in time, but deduction can.

Also,

Bell’s inequality does not apply to some possible hidden variable theories. It only applies to a certain class of local hidden variable theories. In fact, it might have just missed the kind of hidden variable theories that Einstein is most interested in.

So, some random things have to happen late, near the time they are measured. But maybe others can happen early, at the time of the entanglement. Which is true in the cases people are interested in? How can we find out?

What I mean is that in some ways the question from SR becomes slightly metaphysical.

Because if ‘the arrow of time’ is a local definition, which is how I see it, then that also means that any observation you make must be valid from your frame of reference, just as a Lorentz contraction should be for that speeding muon impacting on Earth.

That another frame of reference will define it differently doesn’t invalidate the muon’s frame. But that rests on the assumption of ‘time’ always being a ‘local phenomenon’, which rules out the assumption of a ‘same SpaceTime’ for us all, time- and space-wise.

But the ‘arrow’ is always a local definition as far as I can see. Even though you can join any other frame of reference and find the time dilation you observed earlier ‘gone’, from your new ‘local perspective’ in SpaceTime that only states that, relative to your life span, your clock never changes.

The thing joining SpaceTime is a constant: light’s speed in a vacuum. And that is also what gives us ‘time dilations’ and the complementary Lorentz-FitzGerald contractions, well, as I see it 🙂
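For a concrete feel for the muon example: the sketch below computes the Lorentz factor γ = 1/√(1 - v²/c²) for a muon assumed to move at 0.998c, then looks at the same decay from both frames. In the Earth frame the muon’s clock runs slow; in the muon’s frame the atmosphere (assumed here to be 10 km thick) is contracted. Both descriptions agree on whether the muon reaches the ground. The speed and thickness are illustrative assumptions; the rest-frame mean lifetime of about 2.2 μs is the standard value.

```python
import math

C = 299_792_458.0           # speed of light in vacuum, m/s
MUON_LIFETIME_S = 2.197e-6  # mean muon lifetime at rest, about 2.2 microseconds

def lorentz_gamma(beta):
    """Lorentz factor for a speed v = beta * c (requires 0 <= beta < 1)."""
    return 1.0 / math.sqrt(1.0 - beta * beta)

beta = 0.998  # assumed muon speed as a fraction of c (illustrative)
g = lorentz_gamma(beta)
print(f"gamma = {g:.2f}")
# Earth frame: the muon's clock is dilated, so it lives longer and goes farther.
print(f"Earth frame: mean lifetime {g * MUON_LIFETIME_S * 1e6:.1f} us, "
      f"mean decay length {g * beta * C * MUON_LIFETIME_S / 1000:.1f} km")
# Muon frame: the lifetime is normal, but the atmosphere is contracted.
print(f"Muon frame: a 10 km atmosphere is contracted to {10 / g:.2f} km")
```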

Hmm, sorry.. That was me explaining myself, not answering your post.

I liked your citation on ‘deduction’. SpaceTime as a ‘whole experience’ I see as conceptual, described through diverse transformations between ‘frames of reference’ that join them into a ‘whole’, and so it also becomes the exact same kind of ‘deduction’ he described.

If a time dilation is ‘true’ from your frame of reference, and differs from my frame’s observations, which we know to be true through experiments, then locality defines your ‘reality’, and it has to be real if one accepts Relativity. And that should mean that radiation is what joins us.

“Bell’s inequality does not apply to some possible hidden variable theories. It only applies to a certain class of local hidden variable theories. In fact, it might have just missed the kind of hidden variable theories that Einstein is most interested in.”

Would you have an example of that, or a link? On the other hand you write “Somehow, quantum mechanics is arranged so that it is impossible for the truth to exist but be unknown.”

I like ‘indeterminacy’; as a principle I find it rather comforting, reminding me of ‘free will’ in some circumstances. But I also have faith that we will find a way to fit it into a model where that indeterminacy becomes a natural effect of something else. Wasn’t that what Einstein meant too? Or did he expect ‘linear’ causality chains to rule everything? I’m not sure how he thought of it there. I know he found entanglements uncomfortable in that they contained this mysterious ‘action at a distance’. But Relativity splits into local definitions of reality as I think of it, brought together by Lorentz transformations, describing the ‘whole universe’ we observe through radiation’s constant speed. So from my point of view, ‘reality’ and the arrow both follow one constant, and that one will always be a local phenomenon. That simplifies a lot of things for me at least, although it makes ‘constants’ into something defining the rules of the game, and the question of a whole unified SpaceTime into something of a misnomer.

Regarding hidden variable theories, the major distinction is between local and nonlocal ones. As I understand it (and I am admittedly not an expert on these controversies, so take this explanation with a grain of salt), EPR concerned itself with the idea of a local hidden variable theory (LHVT): that a particle’s properties such as momentum and spin are well-determined, and spatially localized to the particle. This is the concept that would be consistent with classical mechanics: localized and definite particle properties.

Bell’s theorem suggests that physical experiments *can* tell the difference between a local hidden variable theory and conventional quantum mechanics. Experimental results are inconsistent with the LHVT and consistent with conventional quantum theory, suggesting that an LHVT cannot be correct.

There is still a possibility of a *nonlocal* HVT, however, in which the properties of a particle are definite but “spread out” in space-time in some manner. This is typically done by imagining that a definite particle exists coupled to a “guiding wave” that controls its motion. No experiments have been done to conclusively rule out a nonlocal HVT.

This puts physicists in a bit of a philosophical conundrum: they can either accept QM, which requires throwing away determinism, or they can accept NLHVT, which requires throwing away causality (the theory requires faster-than-light influences between particles). As I understand it, most physicists at this point act under the assumption that conventional QM is the better interpretation, though the question is by no means solved.

It is these sort of controversies, BTW, that make me dubious of any attempt to extend simple quantum postulates to a universal scale without qualification.

“Regarding hidden variable theories, the major distinction is between local and nonlocal ones.”

The big distinction is about causation that happens instantaneously at large distances. That’s spooky. A hidden variable theory that gives you instantaneous causation at large distances is no improvement, and presumably some of those can easily be tuned to give the same result as QM.

“As I understand it (and I am admittedly not an expert on these controversies, so take this explanation with a grain of salt), EPR concerned itself with the idea of a local hidden variable theory (LHVT): that a particle’s properties such as momentum and spin are well-determined, and spatially localized to the particle.”

They may be taking that too far. What if some local variables are set, and others are not? Then you could have some variables set locally and the information travels at lightspeed or slower, and later is revealed. But other things could be strictly probabilistic and could be set later.

Then it might turn out that nothing spooky happens, and at the same time it could definitely be shown that some events cannot be determined by local variables.

Smith and Jones are physicists at the same university. They both own red Ferraris and it is impossible to tell the cars apart by satellite imagery. Smith lives to the north and Jones lives to the south. So it is predictable that whenever the satellite photos show a red Ferrari going south from the university, the other will go north. By satellite studies we cannot tell whether these Ferraris have hidden variables (namely Smith and Jones) or whether they are merely entangled. Maybe the choice which of them will go south is never made until one of them actually turns, and that information is then instantaneously transmitted to the other one so it knows to turn the opposite direction. (But *we* know that it’s really Smith and Jones, the hidden variables, and they don’t decide completely at random while on the road which will go home to Mrs. Smith and which to Mrs. Jones.)

But as it turns out, there are nine red Ferraris at the university. Two homes are more or less to the northeast, two to the northwest, three to the southwest, and two to the southeast. So when one red Ferrari goes south you can’t be sure the next one will go north, though the physicists are somewhat likely to leave around the same time because of departmental meetings etc.

In reality, it occasionally happens that Smith and Jones both go to Smith’s house. Sometimes a paper they are working on together is approaching deadline and they work late into the night. Occasionally they go bowling together. Just possibly they might occasionally swap wives — but only after near-instantaneous phone communication with both wives to get their approval. Then the red Ferraris go the opposite directions, but by satellite imagery you’ll never know….

http://en.wikipedia.org/wiki/Hidden_variable_theory

http://en.wikipedia.org/wiki/Local_hidden_variable_theory

These various links do not at all make it obvious why local hidden variables are impossible, or even give an example where the result has to be spooky because local hidden variables cannot apply to it. They give some of the details of the arguments, but do not show how those arguments fit together to forbid any local hidden variables, even though they claim there can never be any.

Non-locality itself is widely accepted, no?

Interesting; I will have to relearn this. Actually, entanglement is the ‘spookiest’ thing I know of, and it gives me one of the biggest headaches too 🙂 I will need to reread it all. But if we take the simple case where you down-convert one ‘photon’ into two ‘entangled’ ones, we have already proved that they ‘know’ each other’s spin instantaneously.

Assume they are ‘the exact same’. How does that fit with ‘locality’? Both ‘the arrow’ and radiation are local phenomena to me, always the same locally. Maybe the arrow is another name for ‘motion’, meaning that if it is local it has to spring from something ‘jiggling’. But that doesn’t answer ‘time’, as a notion from which that ‘jiggling’ can come to exist, if you see what I mean. To have ‘motion’ you need an arrow, as I see it, since it is through the arrow that ‘motion’ finds its definition. Maybe entanglement is what the universe really is, with ‘motion’ being the way we observe it?

Hmm, and now I’m getting mystical again

Sorry, I will have to blame it on it being ‘the day after’, after Friday I mean 🙂

Another thing that’s confusing is this statement.

“The violation of Bell’s inequalities in Nature implies that either locality fails, or realism in the sense of classical physics fails in Nature, or both.

When one looks at other types of data, it becomes totally unequivocal that locality holds while classical realism fails in Nature.” by Luboš Motl

I can see that locality holds if by that I mean what we measure directly; that’s sort of obvious. But isn’t entanglement a ‘spacelike’ separation, with an ‘instantaneous’ correlation in an observation? And doesn’t that make it a ‘nonlocal’ phenomenon?

The point being that you can’t know what the polarization will be for either of the spatially separated objects until you measure one of them, only that they will be opposite. That is, there is no ‘standard’ to an entangled pair’s polarization other than this ‘oppositeness’ we expect: you can’t say which state/polarization ‘A’ has until it is measured. And since you can’t specify that, you can also argue that two separated ‘particles’ in space must ‘know’ each other. Or is there something more I’m missing?

Yoron, we cover the same material repeatedly.

When two entangled photons are created, we know that their polarization is related but we don’t know what the polarization will be.

There are two obvious ways to look at that. Maybe the polarization is set when they are created, and we don’t know what it is — it is a “hidden variable”. Or maybe the polarization is not set until one of them is measured, and then the other one instantaneously gets its polarization set too in violation of lightspeed etc.

At first sight there’s nothing that says one of these ideas is better than the other. But somehow physicists know that the first is impossible and the second must be true. I have not yet seen any explanation of the argument for why the first is impossible, but a whole lot of physicists say they know it’s impossible according to quantum theory, and also that there are experiments which show it.
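One way to see where the “polarization is set at creation” picture gets into trouble is to write down such a model and compare it with the QM prediction at several polarizer angles. The sketch below uses a deliberately simple, hypothetical local rule (my own choice, not one proposed in this thread): each pair carries a polarization angle fixed at creation, a photon passes (+1) if that angle lies within 45° of its polarizer axis, and the partner is built to give the opposite answer. It reproduces perfect anticorrelation when both polarizers are aligned, but at 22.5° it gives a correlation of about −0.5, while QM predicts −cos(45°), about −0.71. Bell’s theorem is the general statement that no local rule, however clever, can match QM at every pair of angles; this is only one illustrative model.

```python
import math

def passes(polarizer_angle, hidden_angle):
    """Hypothetical local rule: +1 if the polarization fixed at creation lies
    within 45 degrees of the polarizer axis, -1 otherwise."""
    return 1 if math.cos(2 * (polarizer_angle - hidden_angle)) >= 0 else -1

def lhv_correlation(a, b, samples=20_000):
    """Average of (outcome A) * (outcome B) over hidden angles spread evenly
    across [0, pi). Photon B is built to give the opposite answer to A."""
    total = 0
    for k in range(samples):
        lam = (k + 0.5) * math.pi / samples
        total += passes(a, lam) * -passes(b, lam)
    return total / samples

def qm_correlation(a, b):
    """Textbook QM prediction for a polarization-anticorrelated photon pair."""
    return -math.cos(2 * (a - b))

for degrees in (0, 22.5, 45):
    delta = math.radians(degrees)
    print(degrees, round(lhv_correlation(0.0, delta), 3), round(qm_correlation(0.0, delta), 3))
```

The evenly spaced grid of hidden angles stands in for “many identical runs”; a random sample would give the same averages up to statistical noise.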

I don’t understand it yet.

Long term, I’m going to try and go through and understand in more detail the whole “hidden variable” argument and blog about it, so hold tight! I should note that, as said earlier, “hidden variables” are not completely excluded but Bell’s theorem and the battery of experiments more or less confirming it strongly suggest that local hidden variable theories are inconsistent with observations. Physicists who really study this stuff carefully haven’t excluded the possibility of nonlocal hidden variable effects.

Yes, I know. Wasn’t it John Bell who first proved statistically that there could be no classical ‘hidden variables’? See Bell’s theorem: http://www.quantiki.org/wiki/Bell%27s_theorem. I don’t believe in any FTL communication myself, not macroscopically anyway. But neither am I sure what a ‘distance’ is, and that goes for both QM and ‘SpaceTime’.

Yes, I’m afraid we do.

It’s me trying to see it from the start, and keeping it as simple as possible.

Bell’s theorem (http://www.quantiki.org/wiki/Bell%27s_theorem) is what proves it statistically. It states that a classical solution isn’t possible if we assume local ‘hidden variable(s)’, although it still leaves open the possibility of nonlocal variables, such as FTL ‘communication’. But according to relativity, FTL would violate causality, since from some frames of reference we would get impossible effects, such as you answering me before I’ve even asked. So macroscopically, FTL is a strict ‘no-no’ as far as I understand it. That leaves us with the question of how the geometry of the universe can change with relative motion and mass, relative to the observer.

And that’s where I wonder, as I don’t expect FTL to be allowed macroscopically. But then again, I may all too easily be wrong 🙂

Sorry, my first reply didn’t show up until after I had posted the second?

The first one ended up in the spam folder for some reason — I just “approved” it. Sometimes frequent commenters will trip the filter!

Eh, by ‘macroscopically’ I just meant ‘SpaceTime’ here, and relativity, nothing more. We have two views: one is QM, the other is Einstein’s relativity. Some physicists try to join them, maybe most? I’m not sure. One discusses ‘superpositions’ etc., and statistics creating probabilities. The other discusses mostly linear functions, involving macroscopic as well as microscopic causality chains, following an ‘arrow of time’. You can use the speed of radiation and ‘motion’ as a microscopic example of our ‘classic’ causality chains, and planetary orbits as an example of macroscopic causality chains.

Oh, thanks. I wish there was a way to edit, and also to remove a double post, though. It looks silly with two posts saying the same thing 🙂

And, thinking of it, both QM and relativity assume that an arrow of time exists. Otherwise you can’t have statistics, as there would be no order on which to base your expectations in quantum mechanics.

“Imagine putting electron number 1 in atom number 1 and electron number 2 in atom number 2. Well after waiting a while it doesn’t anymore make sense to say that ‘electron number 1 is still in atom number 1’. It might be in atom number 2 now […]”

Seems like this guy needs to reread the Hartree-Fock method for solving Schrödinger’s equation for a multielectron system, not to mention how to build a Slater determinant for a two-electron system.
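For readers who haven’t met it, the two-electron Slater determinant is short enough to write out. The sketch below uses two hypothetical one-dimensional toy orbitals (my own choice, with x standing in for a combined position-and-spin coordinate); it is not a Hartree-Fock calculation, only the antisymmetrized product that such calculations are built from. It shows the two properties that matter here: swapping the electrons flips the sign of the wave function, and putting both electrons in the same spin-orbital makes it vanish, which is the Pauli principle in this language.

```python
import math

def phi_a(x):
    # Hypothetical toy orbital (1D, unnormalized), chosen only for illustration.
    return math.exp(-x * x)

def phi_b(x):
    # A second hypothetical toy orbital, orthogonal to phi_a (odd vs. even).
    return x * math.exp(-x * x)

def slater_2e(orb1, orb2, x1, x2):
    """Two-electron Slater determinant
       | orb1(x1)  orb2(x1) |
       | orb1(x2)  orb2(x2) |  divided by sqrt(2)."""
    return (orb1(x1) * orb2(x2) - orb2(x1) * orb1(x2)) / math.sqrt(2)

x1, x2 = 0.3, -0.7
print(slater_2e(phi_a, phi_b, x1, x2))   # some nonzero value
print(slater_2e(phi_a, phi_b, x2, x1))   # same magnitude, opposite sign
print(slater_2e(phi_a, phi_a, x1, x2))   # 0.0: both electrons in the same orbital
```

Note that the determinant never says which electron is “number 1”: only the antisymmetric combination appears.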

Then again, let’s just hope some kids listen to his lectures, become interested in science and eventually realise he is just (way) overextending some metaphors. Pseudoscience propagates very fast through media like the internet basically because it doesn’t require you to think/test/prove anything; it just requires you to believe the premises and go along with the dodgy logic with which the conclusions are woven. Efforts like yours and other bloggers’, such as the ones you mentioned in your post, will counteract this propagation and proliferation, but only in time. I don’t know what is worse: a country like mine (Mexico), where science is disregarded and neglected, or the USA, where science is even outlawed (well, not exactly, but you know what I mean), like in the infamous Kansas School Board case! I mean, nowadays it seems like a politician’s stance on evolution should be part of his campaign platform! Ridiculous.

Congratulations on yet another wonderful post.

Thank you for the comment, and the compliment! Indeed, pseudoscience flows far too quickly through the media and the political systems. It’s not even a new problem, really; the use of wordplay to justify a “scientific” conclusion reminds me of this comment from an article criticizing perpetual motion back in the late 1800s:

Thanks for this patient and layman-friendly rebuttal. Just saw the lecture for the first time in a 2013 repeat. Not a physicist, but I thought the final claims were rather extravagant. Annoying that the BBC just repeats the programme as if it’s not controversial.

You’re very welcome! Yeah, it is rather irritating. Part of the point of science is admitting when an argument isn’t quite right and revising it accordingly, something that hasn’t been done.

It’s nice rereading this one again. Reminds me of all the things I don’t understand 🙂

The reason this simple transparent-mirror effect can’t simply be explained as a ‘set variable’ created by the mirror is that, probabilistically, the wave function can’t be set until it is measured, if I remember right. And it has been shown experimentally (as I remember it) that there is no way to know, until measured, which polarization the measured particle will have.

You can argue that for identical experiments, with no way to determine how that mirror will ‘influence/polarize’ the photon you measure, there must be a hidden variable. Or you can define it such that the two photons are ‘linked’ by a ‘spooky action at a distance’. But defining that ‘hidden variable’ requires a clearer mind than mine, not that it is ever that clear 🙂

How would a hidden variable exist, assuming ‘identical experiments’ give you different polarizations from identical photons? If I remember this correctly, that is.

A crazy thought: how does a photon see the universe?

Does it see it, or is it just us seeing?

Then it comes down to the arrow.

A photon “sees” the Universe as a flat 2D plane. Since it takes no time to travel anywhere, everything is at the same “depth”.

1. Photons are timeless (as far as physics knows experimentally).

2. Lorentz contraction in the direction of motion should, in the case of a photon, reach what? Infinite contraction, or is there a limit where you can assume a pointlike existence?

3. What would happen to a signal from a relativistically moving object, sent in the opposite direction to its motion? It would be redshifted (as waves), but what about in the case of a photon? Would it warp?
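As a rough numerical illustration of point 2 (standard special relativity for a massive object, not a statement about photons themselves): the contracted length is L = L0 / γ with γ = 1/sqrt(1 − v²/c²). It shrinks toward zero as v approaches c but never reaches zero at any speed below c. At v = c the formula is undefined, which is one way of seeing that there is no inertial frame in which a photon is at rest. The function names below are my own.

```python
import math

def lorentz_factor(beta):
    """gamma = 1 / sqrt(1 - beta**2), where beta = v / c and 0 <= beta < 1."""
    if not 0 <= beta < 1:
        raise ValueError("beta must satisfy 0 <= beta < 1; nothing massive reaches c")
    return 1 / math.sqrt(1 - beta ** 2)

def contracted_length(rest_length, beta):
    """Length measured along the direction of motion by an observer who sees
    the object move at speed beta * c."""
    return rest_length / lorentz_factor(beta)

for beta in (0.6, 0.9, 0.99, 0.999999):
    print(beta, contracted_length(1.0, beta))
```

At 60% of c a 1 m rod measures 0.8 m; each extra “9” in beta shrinks it further, but there is no finite speed at which it becomes a point.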

And the redshift itself: is there a limit to how redshifted something can become relative to the moving observer?

I could assume that there must be a limit, as I can imagine a stationary (inertial) observer able to watch that ship’s signal, but I’m not sure, although it seems a contradiction in terms.

If there are no limits to a redshift, what would that imply in the case of those two observers?

Or?

The redshifted signal, as seen from the moving object, will still travel at ‘c’.

And the stationary observer should see it redshifted too, and also moving at ‘c’ from his point of view.

This is assuming a reciprocal effect relative to ‘c’, different coordinate systems, and energy.

But then you have the light quantum itself, which shouldn’t change intrinsically?
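On the redshift questions, the standard relativistic Doppler formula for a source moving directly away from the observer at speed v = βc gives a received-to-emitted frequency ratio of sqrt((1 − β)/(1 + β)), so 1 + z = sqrt((1 + β)/(1 − β)). This grows without bound as β approaches 1 but stays finite for every speed below c, so there is no fixed upper limit on redshift. The light still travels at c for both observers; what changes is its frequency, and with it each photon’s energy E = hf, so the same photon carries different energies in different frames. A minimal sketch (function names are illustrative):

```python
import math

def doppler_factor(beta):
    """Received/emitted frequency ratio for a source moving directly away
    from the observer at speed v = beta * c (relativistic Doppler effect)."""
    if not 0 <= beta < 1:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return math.sqrt((1 - beta) / (1 + beta))

def one_plus_z(beta):
    """Redshift z is defined by 1 + z = emitted frequency / received frequency."""
    return 1 / doppler_factor(beta)

for beta in (0.6, 0.8, 0.99, 0.999999):
    print(beta, one_plus_z(beta))
```

For example, a source receding at 0.6 c gives 1 + z = 2 (every wavelength doubled), and at 0.8 c it gives 1 + z = 3.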

I am glad to see you are excited about this stuff 🙂

I know.

Sometimes one just has to let go

But, it is confusing 🙂